Sense of Hearing in AI

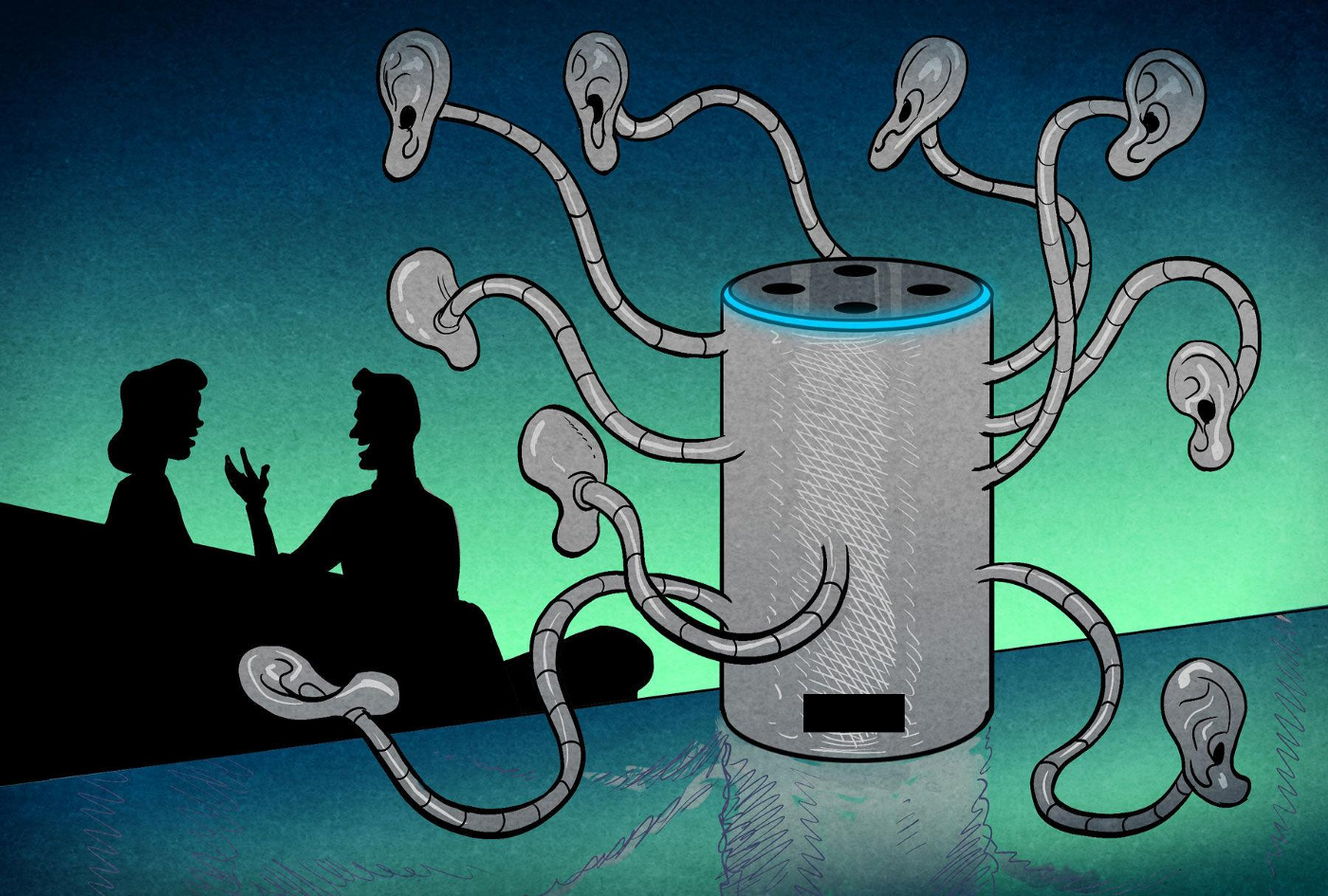

A true AI system should have all five senses that humans do: sight, hearing, smell, taste, and touch. Considerable effort is going into making AI capable of understanding signals from each of these senses. Combining hearing, that is, the analysis of sound signals, with video can make AI-based systems more reliable. Our aim is to make AI systems capable of understanding and responding to sound as humans do.

Areas and Applications

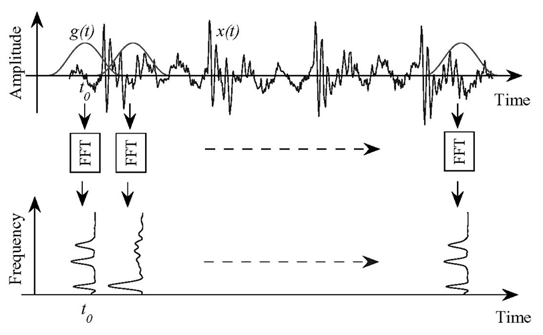

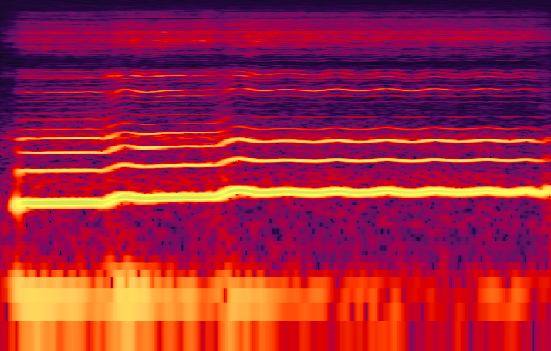

There are three main areas: sound classification, speech recognition, and sound/speech generation.

Our Work -

Future Work -

As humans, we know that hearing is a very important sense for us. After we hear a sound, how quickly does our nervous system process it and respond to it? And how reliable are those responses? Let’s look at the concept.

Consider an example: “All colleges and schools are under lockdown in Delhi because of...........” If this sentence were put to anyone in the country, the answer would almost certainly be coronavirus, given the current situation. Summer, pollution, or riots could also be correct answers, but they are not as close to the current situation. This is how our neurolinguistic processing works: on the basis of previous knowledge and the situation. With the help of Natural Language Processing, we intend to teach AI about parts of speech so that it can fill in a suitable answer to complete the sentence, and to enhance our system so that it uses its short-term memory to answer appropriately.
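
The idea of choosing the completion that best fits the current situation can be illustrated with a toy sketch. This is not our system and not a real language model: the `RECENT_CONTEXT` list, the `rank_completions` function, and the counts are all hypothetical, made up only to show how recent "memory" can push one candidate ahead of other grammatically valid ones.

```python
# Hypothetical short-term memory: (keyword, cause) pairs the system has
# "heard" recently. The entries and their frequencies are invented for
# illustration only.
RECENT_CONTEXT = [
    ("lockdown", "coronavirus"),
    ("lockdown", "coronavirus"),
    ("lockdown", "coronavirus"),
    ("lockdown", "pollution"),
    ("lockdown", "riots"),
    ("holiday", "summer"),
    ("holiday", "summer"),
]


def rank_completions(sentence, candidates, observations=RECENT_CONTEXT):
    """Order candidates by how often they were recently seen together
    with a keyword that also appears in the sentence."""
    words = set(sentence.lower().replace(".", " ").split())
    scores = {candidate: 0 for candidate in candidates}
    for keyword, cause in observations:
        if keyword in words and cause in scores:
            scores[cause] += 1
    # Highest score first; ties keep the original candidate order.
    return sorted(candidates, key=lambda c: (-scores[c], candidates.index(c)))


sentence = "All colleges and schools are under lockdown in Delhi because of ..."
candidates = ["summer", "pollution", "riots", "coronavirus"]
ranking = rank_completions(sentence, candidates)
print(ranking)
```

Here every candidate is a plausible noun for the blank, but only the recent observations decide the order. A practical system would replace the hand-written counts with a trained language model and a proper representation of context.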